Mule High Availability (HA) Cluster Overview

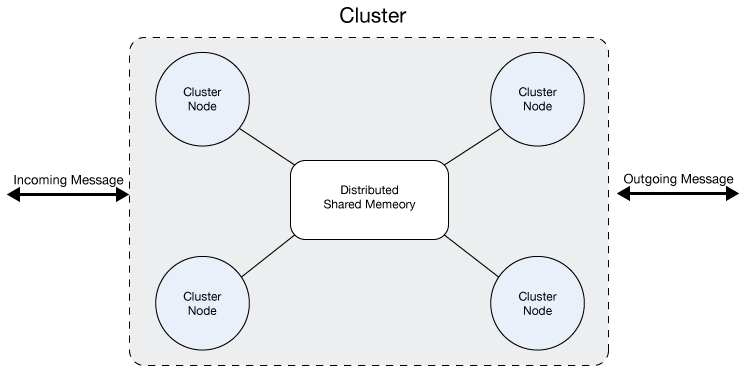

A cluster is a set of Mule instances that acts as a unit. In other words, a cluster is a virtual server composed of multiple nodes. The servers in a cluster communicate and share information through a distributed shared memory grid. This means that the data is replicated across memory in different physical machines.

The Benefits of Clustering

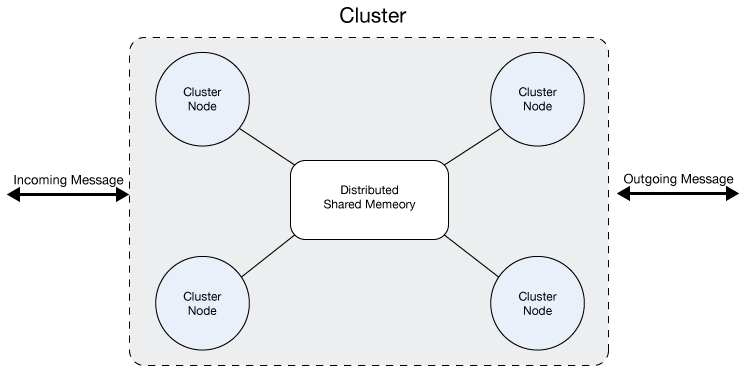

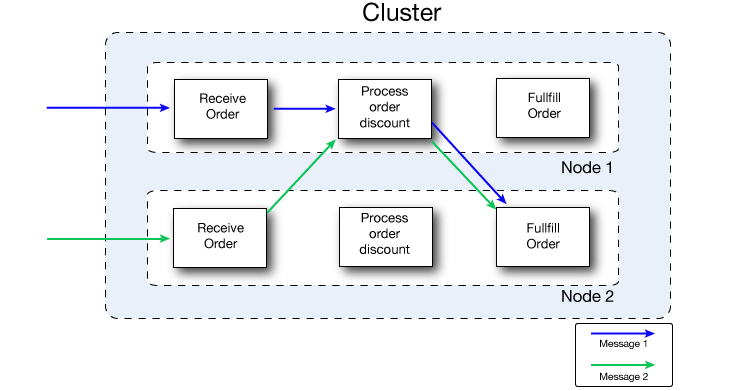

Clustering together Mule ESB servers ensures high system availability. If a node becomes unavailable due to failure or planned downtime, another node in the cluster can assume the workload and continue to process existing events and messages. The following figure illustrates the processing of incoming messages by a cluster of two nodes. Notice that the processing load is balanced across nodes – Node 1 processes message 1 while Node 2 simultaneously processes message 2.

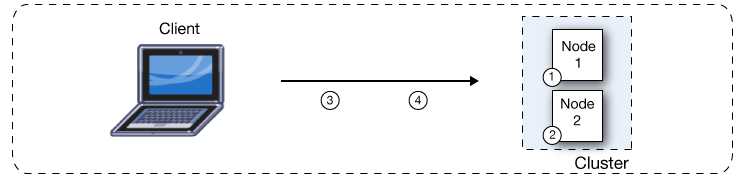

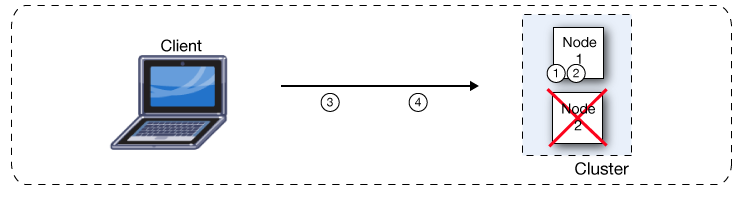

If one node fails, the other available nodes pick up the work of the failing node. As shown in the following figure, if Node 2 fails, Node 1 processes both message 1 and message 2.

Because all nodes in a cluster process messages simultaneously, clusters can also improve performance and scalability. Compared to a single node instance, clusters can support more users or improve application performance by sharing the workload across multiple nodes or by adding nodes to the cluster.

The following figure illustrates workload sharing in more detail. Both nodes process messages related to order fulfillment. However, when one node is heavily loaded, it can move the processing for one or more steps in the process to another node. Here, processing of the Process order discount step is moved to Node 1, and processing of the Fulfill order step is moved to Node 2.

Beyond benefits such as high availability through automatic failover, improved performance, and enhanced scalability, clustering Mule instances offers the following benefits:

- Automatic coordination of access to resources such as files, databases, and FTP sources. Mule automatically manages which node will handle communication from a data source.

- Automatic load balancing of processing within a cluster. If you divide your flows into a series of steps and connect these steps with a transport such as VM, each step is put in a queue, making it cluster enabled. Mule can then process each step in any node, and so better balance the load across nodes.

- Cluster lifecycle management and control. See Managing Mule High Availability (HA) Clusters.

- Cluster and node performance monitoring. See Monitoring a Cluster.

- Raised alerts. You can set up an alert to appear when a node goes down and when a node comes back up. See Working With Alerts.
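The step-and-queue arrangement from the list above can be modeled in a few lines of Python. This is a conceptual sketch only, not Mule code: the `deque` objects stand in for cluster-wide VM queues, and the function name, step names, and node names are hypothetical. It shows that once each step reads from a shared queue, the step for a given order can run on whichever node is free next.

```python
from collections import deque


def run_cluster(orders, node_names):
    """Push every order through two queued steps, rotating across nodes."""
    discount_q = deque(orders)  # stand-in for a shared VM queue feeding step 1
    fulfill_q = deque()         # stand-in for a shared VM queue feeding step 2
    log = []                    # (node, step, order) in processing order
    turn = 0
    while discount_q or fulfill_q:
        # The next free node takes whatever work is waiting.
        node = node_names[turn % len(node_names)]
        turn += 1
        if fulfill_q:
            order = fulfill_q.popleft()
            log.append((node, "fulfill", order))
        else:
            order = discount_q.popleft()
            log.append((node, "discount", order))
            fulfill_q.append(order)  # hand the order to the next step's queue
    return log
```

With two orders and two nodes, the discount step and the fulfill step of the same order end up on different nodes, which is the kind of redistribution that queuing between steps makes possible.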

All Mule instances in a cluster actively process messages. Note that Mule is internally scalable – a single Mule instance can scale easily by taking advantage of multiple cores. Even when a Mule instance takes advantage of multiple cores, it still operates as a single server or node in a cluster.

Cluster Support for Transports

All Mule transports are supported within a cluster. Because of differences in the way different transports access inbound traffic, the details of this support vary. In general, outbound traffic acts the same way inside and outside a cluster – the differences are highlighted below.

Mule supports three basic types of transports:

- Socket-based transports read input sent to network sockets that Mule owns. Examples include TCP, UDP, and HTTP(S).

- Listener-based transports read data using a protocol that fully supports concurrent multiple accessors. Examples include JMS and VM.

- Resource-based transports read data from a resource that allows multiple concurrent accessors, but does not natively coordinate their use of the resource. For instance, suppose multiple programs are processing files in the same shared directory by reading, processing, and then deleting the files. These programs must use an explicit, application-level locking strategy to prevent the same file from being processed more than once. Examples of resource-based transports include File, FTP, SFTP, E-mail, and JDBC.
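As an illustration of the application-level locking that uncoordinated programs would need for a shared directory, the sketch below claims a file by renaming it before processing. It is not how Mule coordinates access. The function name, worker IDs, and the `.claimed` suffix are hypothetical, and the approach assumes a local file system where a rename is atomic.

```python
import os
import tempfile


def claim_file(directory, filename, worker_id):
    """Try to claim a file by renaming it; return the claimed path or None."""
    source = os.path.join(directory, filename)
    claimed = os.path.join(directory, f"{filename}.{worker_id}.claimed")
    try:
        # Only one worker can succeed: after the rename, the source is gone.
        os.rename(source, claimed)
        return claimed
    except FileNotFoundError:
        return None  # another worker claimed the file first
```

A worker would process and delete only the files it claimed successfully. A second worker calling `claim_file` on the same name gets `None` and moves on.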

All three basic types of transports are supported in clusters in different ways, as described below.

- Socket-based
  - Since each clustered Mule instance runs on a different network node, each instance receives only the socket-based traffic sent to its node. Incoming socket-based traffic should be load balanced to distribute it among the clustered instances.
  - Output to socket-based transports is written to a specific host/port combination. If the host/port combination is an external host, no special considerations apply. If it is a port on the local host, consider using that port on the load balancer instead to better distribute traffic among the cluster.

- Listener-based
  - Listener-based transports fully support multiple readers and writers. No special considerations apply either to input or to output.
  - Note that, in a cluster, VM transport queues are a shared, cluster-wide resource. The cluster will automatically synchronize access to the VM transport queues. Because of this, a message written to a VM queue can be processed by any cluster node. This makes VM ideal for sharing work among cluster nodes.

- Resource-based
  - Mule HA Clustering automatically coordinates access to each resource, ensuring that only one clustered instance accesses each resource at a time. Because of this, it is generally a good idea to immediately write messages read from a resource-based transport to VM queues. This allows the other cluster nodes to take part in processing the messages.
  - There are no special considerations in writing to resource-based clustered transports:
    - When writing to file-based transports (File, FTP, SFTP), Mule will generate unique file names.
    - When writing to JDBC, Mule can generate unique keys.
    - Writing e-mail is effectively listener-based rather than resource-based.
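To see why unique file names remove the need for coordination on output, consider the sketch below. Mule's actual naming scheme is not described here. The helper name and the name format are hypothetical, chosen only to show that writers who each generate a fresh name never overwrite one another.

```python
import uuid


def unique_file_name(prefix, extension):
    """Return a file name that is, for practical purposes, unique per call."""
    # uuid4 is random, so nodes need not talk to each other to avoid clashes.
    return f"{prefix}-{uuid.uuid4().hex}.{extension}"
```

Each node could call such a helper independently before writing to the shared output directory.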

Clustering and Reliable Applications

High-reliability applications (ones that have zero tolerance for message loss) require not only that the underlying ESB be reliable, but also that this reliability extend to individual connections. Reliability Patterns give you the tools to build fully reliable applications in your clusters.

The Mule 3.2 documentation provides code examples that show how you can implement a reliability pattern for a number of different non-transactional transports, including HTTP, FTP, File, and IMAP. If your application uses a non-transactional transport, follow the reliability pattern. These patterns ensure that a message is accepted and successfully processed or that it generates an "unsuccessful" response allowing the client to retry.

If your application uses transactional transports, such as JMS, VM, and JDBC, use transactions. Mule’s built-in support for transactional transports enables reliable messaging for applications that use these transports.

These actions can also apply to non-clustered applications.
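The contract behind a reliability pattern for a non-transactional transport can be sketched as follows. This is not the code from the Mule documentation. The function names, the `"accepted"` and `"unsuccessful"` strings, and the retry limit are assumptions made for illustration. The receiver acknowledges a message only after it has been stored safely, and otherwise tells the client to retry.

```python
def reliable_receive(message, store):
    """Accept a message only once it has been stored safely."""
    try:
        store(message)  # for example, a write to a transactional queue
    except Exception:
        return "unsuccessful"  # nothing was stored, so the client may retry
    return "accepted"


def send_with_retry(message, receive, max_attempts=3):
    """Client side: resend until accepted; return the attempt count or None."""
    for attempt in range(1, max_attempts + 1):
        if receive(message) == "accepted":
            return attempt
    return None
```

Because the acknowledgement is sent only after the store succeeds, a failure at any earlier point leaves the client holding the message, so it is never silently lost.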

Clustering and Load Balancing

When Mule clusters are used to serve TCP requests (where TCP includes SSL/TLS, UDP, Multicast, HTTP, and HTTPS), some load balancing is needed to distribute the requests among the clustered instances. Various software load balancers are available. Two of them are:

- Nginx, an open-source HTTP server and reverse proxy. You can use nginx’s HttpUpstreamModule for HTTP(S) load balancing. You can find further information in the Linode Library entry Use Nginx for Proxy Services and Software Load Balancing.

- The Apache web server, which can also be used as an HTTP(S) load balancer.

There are also many hardware load balancers that can route both TCP and HTTP(S) traffic.
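What such a load balancer does for a cluster can be modeled with a small round-robin selector that skips nodes marked as down. In practice this job belongs to a product such as nginx, Apache, or a hardware balancer. The class below is a toy model with hypothetical names, not a replacement for any of them.

```python
import itertools


class RoundRobinBalancer:
    """Toy model of a load balancer in front of clustered nodes."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._rotation = itertools.cycle(self.nodes)

    def mark_down(self, node):
        self.healthy.discard(node)

    def mark_up(self, node):
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self):
        """Return the next healthy node in rotation."""
        if not self.healthy:
            raise RuntimeError("no healthy nodes available")
        for node in self._rotation:
            if node in self.healthy:
                return node
```

Requests alternate between nodes while both are up. After `mark_down("node2")`, every request goes to the remaining node until `mark_up("node2")` is called.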

Example Application

A simple example application, Widget Factory, is provided with Mule Enterprise to illustrate the use of clusters for high availability. This example is located in the directory examples/widget under your Mule home directory. Refer to the README.txt file in that directory for information on running the example.

Best Practices

There are a number of recommended practices related to clustering. These include:

- As much as possible, organize your application into a series of steps where each step moves the message from one transactional store to another.

- If your application processes messages from a non-transactional transport, use a reliability pattern to move them to a transactional store such as a VM or JMS store.

- Use transactions to process messages from a transactional transport. This ensures that if an error is encountered, the message will be reprocessed.

- Use distributed stores such as those used with the VM or JMS transport – these stores are available to an entire cluster. This is preferable to the non-distributed stores used with transports such as File, FTP, and JDBC – these stores are read by a single node at a time.

- Use the VM transport to get optimal performance. Use the JMS transport for applications where data needs to be saved after the entire cluster exits.

- Create the number of nodes within a cluster that best meets your needs.

- Implement reliability patterns to create high reliability applications.
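The practice of moving a message from one transactional store to another, with reprocessing on error, can be sketched as below. The queues are plain `deque` objects standing in for transactional stores. The function name and the `max_attempts` cutoff (added so the sketch cannot loop forever) are assumptions, not Mule behavior.

```python
from collections import deque


def process_transactionally(inbound, outbound, handler, max_attempts=3):
    """Move messages from inbound to outbound, redelivering on failure."""
    attempts = {}
    failed = []
    while inbound:
        message = inbound.popleft()  # begin: take the message
        try:
            result = handler(message)
        except Exception:
            attempts[message] = attempts.get(message, 0) + 1
            if attempts[message] < max_attempts:
                inbound.append(message)  # roll back: message is redelivered
            else:
                failed.append(message)   # give up after repeated failures
            continue
        outbound.append(result)  # commit: result reaches the next store
    return failed
```

A message leaves the inbound store for good only when its result has reached the outbound store, so a transient error causes reprocessing rather than loss.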

Prerequisites and Limitations

- Currently, a cluster must consist of at least two servers and at most four. Each server must run on a different physical (or virtual) machine.

- To serve TCP requests, some load balancing across a Mule cluster is needed. See Clustering and Load Balancing for more information about third-party load balancers that you can use. You can also load balance the processing within a cluster by separating your flows into a series of steps and connecting each step with a transport such as VM. This makes each step cluster-enabled, allowing Mule to better balance the load across nodes.

- Multicasting must be enabled for each server in the cluster. This enables the instances to find each other.

Licensing for Clusters

Each Mule server that you use for clustering must include a license that enables clustering. If a license that enables clustering is not installed, clustering will be disabled for that server. You can obtain a license that enables clustering from your MuleSoft sales representative.

For information on installing the license, see Installing a Commercial License.