Studio Visual Editor

-

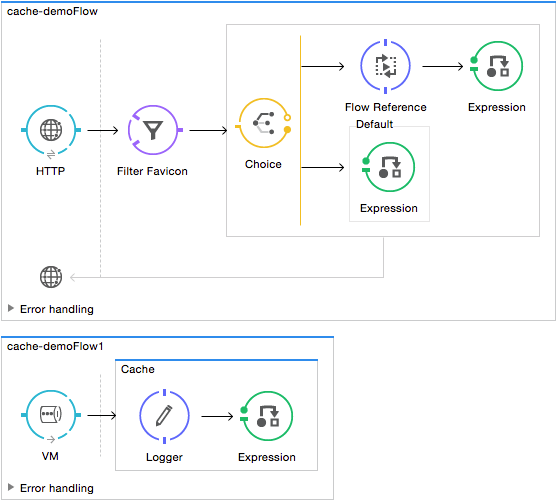

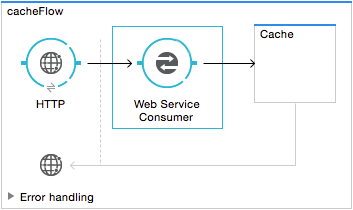

Drag and drop the cache scope icon from the palette into a flow on your canvas.

- Drag one or more message processors from the palette into the cache scope to build the chain of processors to which Mule applies the caching strategy. A cache scope can contain any number of message processors.

-

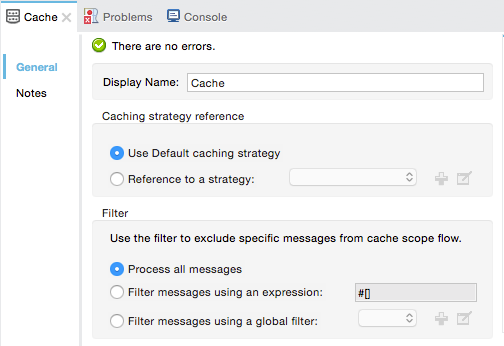

Open the processor’s Properties Editor, then configure the fields per the table below.

Field Descriptions

- Display Name: Defaults to Cache. Customize it to display a unique name for the scope in your application.
  Example: doc:name="Cache"

Caching strategy reference

-

Use Default caching strategy (Default) - Select if you want the cache scope to follow Mule’s default caching strategy.

-

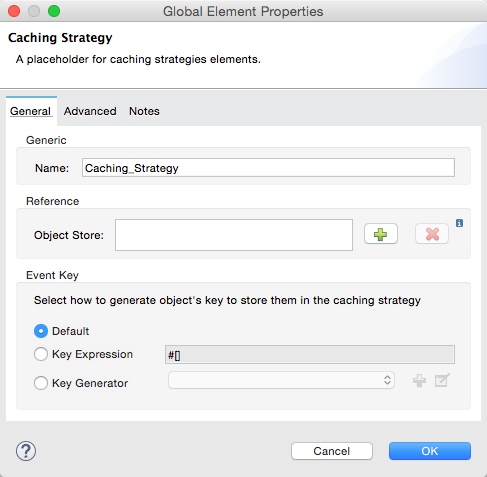

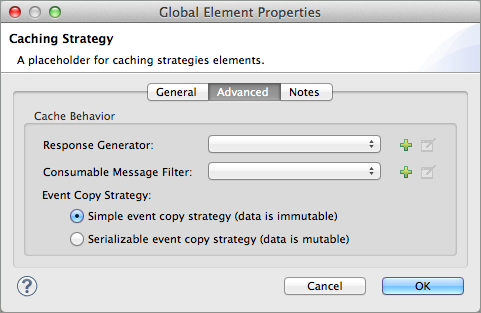

Reference to a strategy - Select to configure the cache scope to follow a global caching strategy that you have created; select the global caching strategy from the drop-down menu or create one by clicking the green plus sign.

Example:

cachingStrategy-ref="Caching_Strategy"

-

-
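In the XML view, the Reference to a strategy option amounts to a global caching strategy declared at the top level of the configuration and referenced by name from the scope. The fragment below is a minimal sketch that assumes the strategy is an ee:object-store-caching-strategy with no further options and that the ee and doc namespaces are already declared; the flow name and the set-payload processor are hypothetical placeholders, not necessarily what Studio generates.

```xml
<!-- Global element: a caching strategy declared outside any flow (assumed minimal form) -->
<ee:object-store-caching-strategy name="Caching_Strategy" doc:name="Caching Strategy"/>

<flow name="cachedFlow">
    <!-- The scope points at the global strategy by its name -->
    <ee:cache doc:name="Cache" cachingStrategy-ref="Caching_Strategy">
        <!-- Hypothetical processor standing in for the expensive work to cache -->
        <set-payload value="expensive result"/>
    </ee:cache>
</flow>
```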

- Filter:

  - Process all messages (default): Select this option if you want the cache scope to execute the caching strategy for every message that enters the scope.

  - Filter messages using an expression: Select this option if you want the cache scope to execute the caching strategy only for messages that match the expression(s) defined in this field.
    If the message matches the expression(s), Mule executes the caching strategy.
    If the message does not match the expression(s), Mule processes the message through all message processors within the cache scope; Mule neither saves nor offers cached responses.
    Example: filterExpression="#[user.isPremium()]"

  - Filter messages using a global filter: Select this option if you want the cache scope to execute the caching strategy only for messages that successfully pass through the designated global filter.
    If the message passes through the filter, Mule executes the caching strategy.
    If the message fails to pass through the filter, Mule processes the message through all message processors within the cache scope; Mule neither saves nor offers cached responses.
    Example: filter-ref="MyGlobalFilter"
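The two filtering options correspond to the filterExpression and filter-ref attributes, and a scope uses one or the other. The fragment below is a sketch that assumes namespaces are already declared and that the global filter is a top-level expression-filter; the flow names, the cacheable flow variable, and the HTTP-method expression in MyGlobalFilter are hypothetical.

```xml
<!-- Hypothetical global filter: only GET requests are eligible for caching -->
<expression-filter name="MyGlobalFilter"
                   expression="#[message.inboundProperties['http.method'] == 'GET']"/>

<!-- Option 1: inline expression on the scope -->
<flow name="expressionFilteredFlow">
    <!-- Non-matching messages still run through the processors, without touching the cache -->
    <ee:cache doc:name="Cache" filterExpression="#[flowVars.cacheable == true]">
        <set-payload value="expensive result"/>
    </ee:cache>
</flow>

<!-- Option 2: reference to the global filter declared above -->
<flow name="globalFilteredFlow">
    <ee:cache doc:name="Cache" filter-ref="MyGlobalFilter">
        <set-payload value="another expensive result"/>
    </ee:cache>
</flow>
```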

XML Editor or Standalone

- Add an ee:cache element to your flow at the point where you want to initiate a cache processing block. Refer to the code sample below.

- Optionally, configure the scope according to the descriptions below.

Element | Description
--- | ---
ee:cache | Use to create a block of message processors that process a message, deliver the output to the parent flow, and cache the response for reuse (according to the rules of the caching strategy).

Field Descriptions

- doc:name: Customize to display a unique name for the cache scope in your application. Note: This attribute is not required in Mule Standalone configuration.

- filterExpression: (Optional) Specify one or more expressions against which the cache scope evaluates the message to determine whether to execute the caching strategy. This attribute is mutually exclusive with filter-ref.

- filter-ref: (Optional) Specify the name of a filter that you have defined as a global element. This attribute is mutually exclusive with filterExpression.

- cachingStrategy-ref: (Optional) Specify the name of a global caching strategy that you have defined as a global element. If no cachingStrategy-ref is defined, Mule uses the default caching strategy.
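Because every attribute is optional, the smallest Standalone form omits them all. Following the rules above, this sketch applies Mule's default caching strategy to every message that enters the scope; the logger is only a placeholder for the processors whose results you want to cache.

```xml
<!-- No doc:name (Standalone), no filter, no cachingStrategy-ref: default strategy, all messages -->
<ee:cache>
    <!-- Placeholder processor; when a cached response is available, Mule does not run it -->
    <logger message="Cache miss: computing response" level="INFO"/>
</ee:cache>
```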

Define Processing Within the Scope

Add nested elements beneath your ee:cache element to define what processing should occur within the scope. The cache scope can contain any number of message processors as well as references to child flows.

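```xml
<ee:cache doc:name="Cache" filter-ref="Expression" cachingStrategy-ref="Caching_Strategy">
    <!-- Placeholders: replace with the message processors whose results you want to cache -->
    <some-nested-element/>
    <some-other-nested-element/>
</ee:cache>
```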
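For reference, here is one way a scope like this could look inside a complete, self-contained Mule 3 configuration file. This is a sketch under assumptions: it uses the Mule 3 core and EE core namespace URIs, replaces the global filter named Expression with an inline filterExpression, and uses hypothetical names (cacheExampleFlow, the cacheable flow variable, the payload text).

```xml
<?xml version="1.0" encoding="UTF-8"?>
<mule xmlns="http://www.mulesoft.org/schema/mule/core"
      xmlns:doc="http://www.mulesoft.org/schema/mule/documentation"
      xmlns:ee="http://www.mulesoft.org/schema/mule/ee/core"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="
        http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd
        http://www.mulesoft.org/schema/mule/ee/core http://www.mulesoft.org/schema/mule/ee/core/current/mule-ee.xsd">

    <!-- Global caching strategy referenced by name from the scope below -->
    <ee:object-store-caching-strategy name="Caching_Strategy" doc:name="Caching Strategy"/>

    <!-- Hypothetical flow with no message source; invoke it with flow-ref from another flow -->
    <flow name="cacheExampleFlow">
        <!-- Cache only messages whose (hypothetical) cacheable flow variable is true -->
        <ee:cache doc:name="Cache" filterExpression="#[flowVars.cacheable == true]"
                  cachingStrategy-ref="Caching_Strategy">
            <!-- Runs on a cache miss, or for any message the filter excludes -->
            <logger message="Running the processors in the cache scope" level="INFO"/>
            <set-payload value="expensive result"/>
        </ee:cache>
    </flow>
</mule>
```

Declaring the strategy as a global element, rather than relying on the default, lets several cache scopes share one strategy by name.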